Deep-learning to Predict Perceived Differences to a Hidden Reference Image

Pacific Graphics 2019

Mojtaba Bemana 1

Joachim Keinert 2

Karol Myszkowski 1

Michel Bätz 2

Matthias Ziegler 2

Hans-Peter Seidel 1

Tobias Ritschel 3

1 MPI Informatik

2 Fraunhofer IIS

3 University College London

Abstract

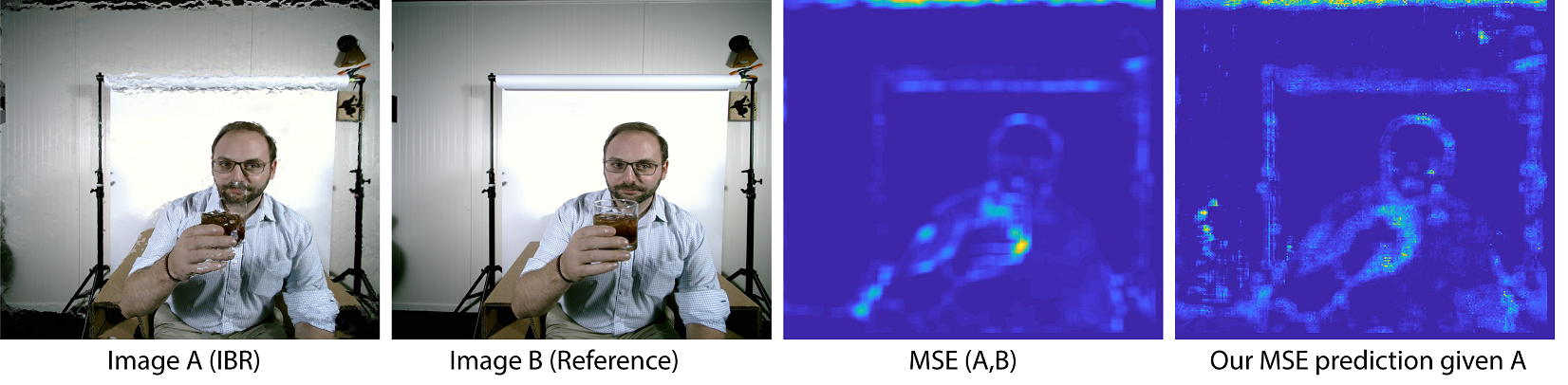

Image metrics predict the perceived per-pixel difference between a reference image and its degraded (e.g. re-rendered) version.

In several important applications, the reference image is not available and image metrics cannot be applied.

We devise a neural network architecture and training procedure that allows predicting the MSE, SSIM or VGG16 image difference from the distorted image alone while the reference is not observed.

This is enabled by two insights:

The first is to inject sufficiently many un-distorted natural image patches, which can be found in arbitrary amounts and are known to have no perceivable difference to themselves.

This avoids false positives.

The second is to balance the learning by carefully ensuring that all image errors are equally likely, which avoids false negatives.

Surprisingly, we observe that the resulting no-reference metric can, subjectively, even perform better than the reference-based one, as it had to become robust against misalignments.

We evaluate the effectiveness of our approach in an image-based rendering context, both quantitatively and qualitatively.

Finally, we demonstrate two applications which reduce light field capture time and provide guidance for interactive depth adjustment.
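The two training insights from the abstract can be sketched in a few lines of NumPy. This is a minimal illustration, not the authors' implementation: the function names `add_clean_patches` and `balancing_weights`, the `clean_fraction` and `n_bins` parameters, and the choice to balance on the mean error per patch are all assumptions made for this example.

```python
import numpy as np

def add_clean_patches(distorted, targets, clean, clean_fraction=0.5):
    """Insight 1: append undistorted patches whose target error map is all zero.

    clean_fraction is a hypothetical knob: number of clean patches relative
    to the number of distorted patches.
    """
    n_clean = int(round(clean_fraction * len(distorted)))
    clean = clean[:n_clean]
    # A clean patch has no perceivable difference to itself, so its target is zero.
    zero_targets = np.zeros((len(clean),) + targets.shape[1:], dtype=targets.dtype)
    return (np.concatenate([distorted, clean]),
            np.concatenate([targets, zero_targets]))

def balancing_weights(targets, n_bins=10):
    """Insight 2: sampling weights that make every occupied error bin equally likely.

    Assumption: patches are binned by their mean target error.
    """
    mean_err = targets.reshape(len(targets), -1).mean(axis=1)
    edges = np.linspace(mean_err.min(), mean_err.max(), n_bins + 1)
    bins = np.clip(np.digitize(mean_err, edges[1:-1]), 0, n_bins - 1)
    counts = np.bincount(bins, minlength=n_bins)
    weights = 1.0 / counts[bins]  # rare error levels get larger weights
    return weights / weights.sum(), bins
```

The returned weights could be passed to a weighted sampler (for example `rng.choice(len(x), p=weights)`) so that rare, large errors are seen as often as common, small ones.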

Materials

Interactive Comparisons

| Distorted image A | Hidden reference image B |
| --- | --- |
| The image input to our method and to the reference metric. | The clean version of A, without artifacts. Not input to our method. |

| Our response on A | GT response on A | Our response on B |
| --- | --- | --- |
| Our attempt to emulate the GT response on A (next column). Ideally, structures and magnitude are the same. | The original metric response on the pair (A, B). We try to reproduce its structures without seeing B. | Our response on B, the clean image. As there are no artifacts, the metric should not report any. Ideally the output is blue, i.e., zero, without false positives. |
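For the MSE case, the reference-based "GT response" can be illustrated as a per-pixel squared-error map. The sketch below assumes float images of shape (height, width, channels) and averages over the color channels; the function name `squared_error_map` is hypothetical, and the exact normalization used for the page's figures is not stated here.

```python
import numpy as np

def squared_error_map(image, reference):
    """Per-pixel squared error, averaged over color channels."""
    diff = image.astype(np.float64) - reference.astype(np.float64)
    return (diff ** 2).mean(axis=-1)

# Toy data: B is a clean image, A is B with an artifact in one 2x2 region.
rng = np.random.default_rng(0)
B = rng.random((8, 8, 3))
A = B.copy()
A[2:4, 2:4] += 0.5

gt_on_A = squared_error_map(A, B)  # non-zero only where the artifact is
gt_on_B = squared_error_map(B, B)  # identically zero: no false positives
```

A no-reference predictor is trained to reproduce `gt_on_A` from A alone and to output a map like `gt_on_B` when given the clean image.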

| Our perceptualized response on A | Perceptualized metric response on A | GT user annotation on A |
| --- | --- | --- |
| The same as our response on A above, but after a linear fit to user responses. Ideally, this should be close to the user annotations. It is less relevant whether it is similar to the perceptualized metric response. | The same as the metric response on A above, but after a linear fit to user responses. Ideally, this should be close to the user annotations. | The user annotation from 10 subjects. |
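The "linear fit to user responses" can be read as fitting a slope and an intercept that map metric values to annotation values. The following is a minimal sketch under that reading; the function name `perceptualize` is hypothetical, and whether the fit is done per image or over a whole dataset is an assumption left open here.

```python
import numpy as np

def perceptualize(response, user_annotation):
    """Least-squares fit user ≈ slope * response + intercept, applied to response."""
    slope, intercept = np.polyfit(response.ravel(), user_annotation.ravel(), deg=1)
    return slope * response + intercept, (slope, intercept)
```

The fitted map keeps the spatial structure of the response and only rescales its magnitude, which is why the comparison to the user annotation remains meaningful.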

| Ours on shift(B) | Metric on shift(B), B |
| --- | --- |
| An image shift does not create errors. A successful method will have a null response on a clean shifted image B. This is often the case. | An image shift does not create errors. A successful method will have a null response on a clean shifted image B. This is often not the case. |
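Why a reference-based metric reports errors on a merely shifted clean image can be seen with a per-pixel squared error, used here as a stand-in for the classic metric. This is an illustrative sketch: `metric_on_shift` is a hypothetical helper, and `np.roll` wraps pixels around the border, which a real image shift would not do.

```python
import numpy as np

def squared_error_map(image, reference):
    """Per-pixel squared error, averaged over color channels."""
    diff = image.astype(np.float64) - reference.astype(np.float64)
    return (diff ** 2).mean(axis=-1)

def metric_on_shift(clean, dx):
    """Response of the reference-based metric to the pair (shift(B), B)."""
    # Horizontal shift by dx pixels with wrap-around (simplification).
    return squared_error_map(np.roll(clean, dx, axis=1), clean)
```

For any textured image the response at `dx=1` is non-zero even though the shifted image contains no artifacts, while a perfectly flat image yields zero; a no-reference predictor never compares against B pixel by pixel and can therefore stay at zero.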

| Ours on shift(A) | Metric on shift(A), A |
| --- | --- |
| Still, when an image with errors is shifted, the error must not become zero but remain as a true positive. So this image should look like a shifted copy of the non-shifted error map. | Repeating this with a classic metric also produces a shifted response. |

Histogram (axis label: Metric Response)

Citation

Mojtaba Bemana, Joachim Keinert, Karol Myszkowski, Michel Bätz, Matthias Ziegler, Hans-Peter Seidel, Tobias Ritschel

Learning to Predict Image-based Rendering Artifacts with Respect to a Hidden Reference Image

Computer Graphics Forum (Proc. Pacific Graphics 2019)

@article{Bemana2019,
  author  = {Mojtaba Bemana and Joachim Keinert and Karol Myszkowski and Michel Bätz and Matthias Ziegler and Hans-Peter Seidel and Tobias Ritschel},
  title   = {Learning to Predict Image-based Rendering Artifacts with Respect to a Hidden Reference Image},
  journal = {Computer Graphics Forum (Proc. Pacific Graphics)},
  year    = {2019},
  volume  = {38},
  number  = {7},
}

Acknowledgements

This work was partly supported by the Fraunhofer-Max Planck cooperation program within the framework of the German pact for research and innovation (PFI) and a Google AR/VR Research Award.

We thank Fraunhofer IIS, Stanford, Google, and Technicolor institute for providing us with the light field datasets.